If I had written about user research five years ago, half of this article would have focused on convincing people to include research in their product development cycle. That time is long gone. Now everyone is customer obsessed, and most product teams spend hours talking to customers in an attempt to empathise before designing products and solutions. For a second, we thought, “What a great shift!” Then we paused to take a closer look at the data that was being passed off as evidence to launch a product. We were mortified.

We quickly realised that people have yet to understand the difference between reliable and unreliable data, and between factual evidence and opinions. Most businesses are making decisions based on users’ opinions, which is likely to lead to a really frustrating product development journey. We’ll talk more about this later in this article.

Now, user research is not rocket science, but just like any other skill, you need to practise in order to get good at it. For some reason, most companies will send their least trained employees, or even interns, to go out and talk to customers. For them, if you can talk and ask questions, you can do user research. “This is the set of questions, now go wild!” is often the attitude, but really, there is an art to interviewing and doing user research that yields truly useful results.

This article is about avoiding these fundamental mistakes so that you get reliable, useful and usable data. We’ll first discuss common mistakes and then we will go through small steps you can take to improve your practice.

Mistakes

- Collecting opinions, not facts

- Imposing Quantitative methods on Qualitative data

Steps to improve

- Using Themed questions

- Insights: more than just findings

Collecting opinions, not facts

In user research, the people you interview are naturally inclined to be helpful and will give a lot of feedback that they think is important. Be wary, though: if you don’t ask the right questions, or if you ask them in the wrong way, a lot of the “feedback” you get is actually opinion. One of the most common research mistakes is to interpret opinions as facts. Opinions are dangerous because they change, are subjective and are inconclusive. If you build your products or services on them, your product can become irrelevant at any time, because opinions shift and are heavily influenced by how a user feels in the moment. The focus should always be on how users use your product, not on what they think of it.

Facts, on the other hand, are concrete. You want to dig into the facts of users’ lives: their pain points, their behaviour and the solutions they already deploy. The focus should be on understanding whether there are any existing habits, such as how they usually tackle a problem, or existing technology that you can build on so that users can easily adopt your solution as part of their routine. These all need to be taken into consideration as you design the solution. What matters is the whole journey of how they will adopt your solution, not whether they like it.

In order to collect facts from a user, you need to ask the right questions. I usually divide my questions into three types: context-specific, product-specific and opinion-based. Most of my focus is on context-specific and product-specific questions, as useful insights mostly come from these two areas. I often ask for examples so that users tell me stories about what actually happened, which are facts. If I have extra time, I’ll squeeze in some opinion-based questions. Let’s run through an example:

Research Goal: Find out how we might improve Jira as a product for task management.

| Question Type | Example |
|---|---|
| Context-specific | Can you run us through your usual routine from when you first reach the office until the end of the day? What are the tools you use for your own task management? How do you plan your daily and weekly tasks? How do you update your team members or supervisor on your task progress? |
| Product-specific | Can you show us the feature you use the most in Jira? Why? Can you tell us when you last opened Jira and why? |
| Opinion-based | Can you show us the feature you like the most in Jira? Why? If there is a feature you wish Jira had, what would that be? |

The first two types of questions will give you richer data about a user. They allow you to:

- Imagine/understand how your users use your product as part of their daily routine

- Decide on information hierarchy, i.e. prioritisation of what to show or provide your users with, as you now know what important information they need to complete their tasks

- Decide on your non-negotiable features and “nice to have” features

Qualitative vs. Quantitative Data: More does not necessarily mean more

Most companies are more familiar with quantitative than qualitative data, which means that they are sceptical of research that has a small sample size. After all, when learning about research and statistics in school, we were always taught that a larger sample means more reliable results. As a result, in my experience, most teams resolve to double or even triple the number of participants in a qualitative study. The outcome is often remote, unmoderated testing or well-curated surveys where the focus is on getting a large number of responses rather than rich, quality data. What’s worse is when they quantify the results and turn them into graphs, making things look more scientific than they are.

It is not that quantitative data is bad, but you shouldn’t try to use quantitative methods on qualitative data. You first need to understand the differences between the two and what each can be used for. Quantitative data is used to segment and identify your target market, and qualitative data is used to gain an understanding of the underlying reasons, opinions and motivations of your target market.

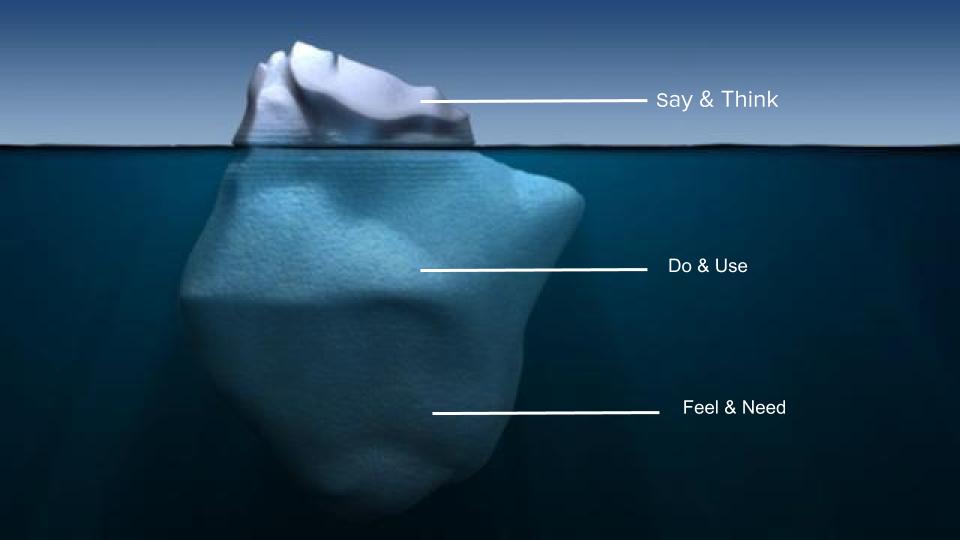

Using quantitative methods on qualitative data may mean testing the product on, and talking with, more people to get more results, but this does not necessarily lead to more insights, just a lot more work. Be aware that there is a difference between the two types of data. If you want to understand the user beyond the surface, you should focus more on qualitative data. It is not just about what they say and think, but about finding out what they feel and discovering their unmet needs. This is something that you almost certainly cannot achieve through a survey.

Doing better research

It’s not hard to change your research methodology a little to get better quality data. Here are a couple of suggestions.

Using Themed questions

When you design your question guide, start with broad themes. Then test it.

Themes will help you create categories for the different kinds of data you want to collect. Once this is done, flesh out a few questions under each theme and test them with people around you. From their answers, you will know which questions to ask to elicit relevant data. Let’s look at an example:

Research question: How might we help our youths get better at saving money?

| Themes | Spending habits | Current saving methods | Motivation to start saving |
|---|---|---|---|
| Question guide | When was the last time you purchased something that you later regretted? Can you tell us about this experience? | What is the most effective personal saving method you have tried so far? Tell us about this experience. If none, what are the top 3 reasons stopping you from saving? | What is an ideal retirement experience for you? When was the last time you were trapped in an emergency situation and money was an issue? |

Insights: more than just findings

Good researchers are great at spotting patterns and findings. Great researchers take those results, analyse them and derive insights that inform breakthrough solutions. Deeper insights are truths that are unearthed by continuously asking “why” to get to the heart of the problem.

Telling a finding from an insight is like a muscle that needs to be strengthened. The key is to always be curious about the deeper meaning of someone’s behaviour. “People watching” is one of the best exercises to train your eyes to identify interesting findings that can lead to deeper insights. When you are in a public space, observe what people around you are doing or carrying. Question why they’re doing this and, if you are bold enough, casually chat with them to understand them better. If this is too much of a challenge, try observing and chatting with your colleagues instead.

Example of Findings vs Insights

| Topic | Finding | Insight |
|---|---|---|
| Why patients did not adhere to their medicine intake schedule. The researchers ran a few user interview sessions with patients and with people working with patients. | It’s a tedious job for patients to refill their own medicine, and once they finish it, they don’t get a refill and do not resume their course of medication. | People don’t feel the urgency to take medicine when symptoms are no longer showing, so they delay refilling their prescriptions and eventually stop doing so. |
| Why keeping a good diet is hard for people. The researcher ran user interview sessions with members of their target audience who had tried a healthy diet before and failed. | Lack of support and accountability to keep things on track. | People avoid accountability in diets in large part because they fear failure and are afraid of humiliating themselves. |

Small steps, quick wins

Overall, the good news is that user research is just like any other skill: you get better with practice. These are small, easy steps you can start adopting right away. If you take them to avoid these fundamental mistakes in your current user research practice, we are sure that the time you invest in talking to your users will be more productive and will yield better results. Let’s move the needle on the user research scale from “awareness” to “nailing it”.