“Our analytics show users are completing their tasks,” the enterprise product owner told me confidently. “The features are all functioning as designed.” Yet adoption wasn’t growing as expected and user satisfaction scores were stagnant.

This scenario plays out more often than you might think – products that work perfectly fine on paper, but somehow fail to truly resonate with users in practice.

Why teams skip live testing (and why that’s understandable)

Let’s be honest – when you’re managing a product team, live testing often feels like a luxury you can’t afford. You’re juggling feature requests, fixing bugs, meeting deadlines and keeping stakeholders happy. The product is live, metrics are “okay,” and testing seems like something that can wait.

We get it. We’ve worked with enough product teams to understand the reality:

- Your backlog is already overwhelming

- Resources are stretched thin

- Stakeholders want new features, not refinements

- Getting access to real users is challenging

- Testing could disrupt existing workflows

But one lesson has held true time and again: skipping proper testing now almost always costs more in the long run. When products evolve without deep user understanding, teams end up building features that don’t solve real problems, fixing symptoms instead of root causes, and missing opportunities for genuine improvement.

What live testing really reveals: three stories

Story 1: The Trust Deficit

A few years ago, while investigating an underused performance monitoring feature in an enterprise reporting application, we discovered something unexpected. Users had developed elaborate verification routines, double-checking every number in Excel. Why? A lack of trust in the data, stemming from inconsistent data input and unclear data lineage.

The solution wasn’t in the dashboard design – it was in fixing the upstream data collection process and making data sources transparent.

Impact: The improved data collection process and transparency transformed how teams used the system. Analysts stopped maintaining parallel Excel sheets and began trusting the platform for critical decisions. Team leads reported more confident decision-making in planning meetings and the platform became their single source of truth for performance discussions.

Story 2: The Presentation Paradox

We were brought in to find out why a reporting dashboard was failing to drive action in executive meetings, despite its sophisticated analytics capabilities. Live testing revealed the core issue: a long-form analysis tool was being forced to serve as a presentation medium.

This led to a breakthrough solution – creating a separate presentation mode that prioritised narrative flow over deep analysis, allowing presenters to tell clear data stories while maintaining access to detailed insights.

Impact: The new presentation mode transformed how teams communicated data insights. What was once a source of confusion in executive meetings became a powerful storytelling tool. Leaders could now focus on strategic implications rather than questioning the numbers, leading to more decisive actions based on data insights.

Story 3: Hidden Opportunities

Late last year, I was part of the team at Stampede tasked with conducting a UX gap analysis for the Malaysian government. We tested a startup ecosystem accelerator website with real users to identify the gaps, but walked away with so much more. Not only did we identify immediate usability issues and recommend practical fixes, we also discovered unexpected ways users were finding value in the platform.

Impact: Beyond addressing the immediate usability issues, the insights led to the development of new services that better matched how startups were actually using the platform. The client was able to evolve their offering from a simple resource website to a more comprehensive support platform that better served their ecosystem’s needs.

The Stampede Approach: What’s the (whole) story?

While many testing approaches focus on isolated aspects like UI or functionality, our methodology is designed to give product teams a more complete picture. To us, real insight comes from understanding not just how users interact with your product, but how your product fits into their broader work life.

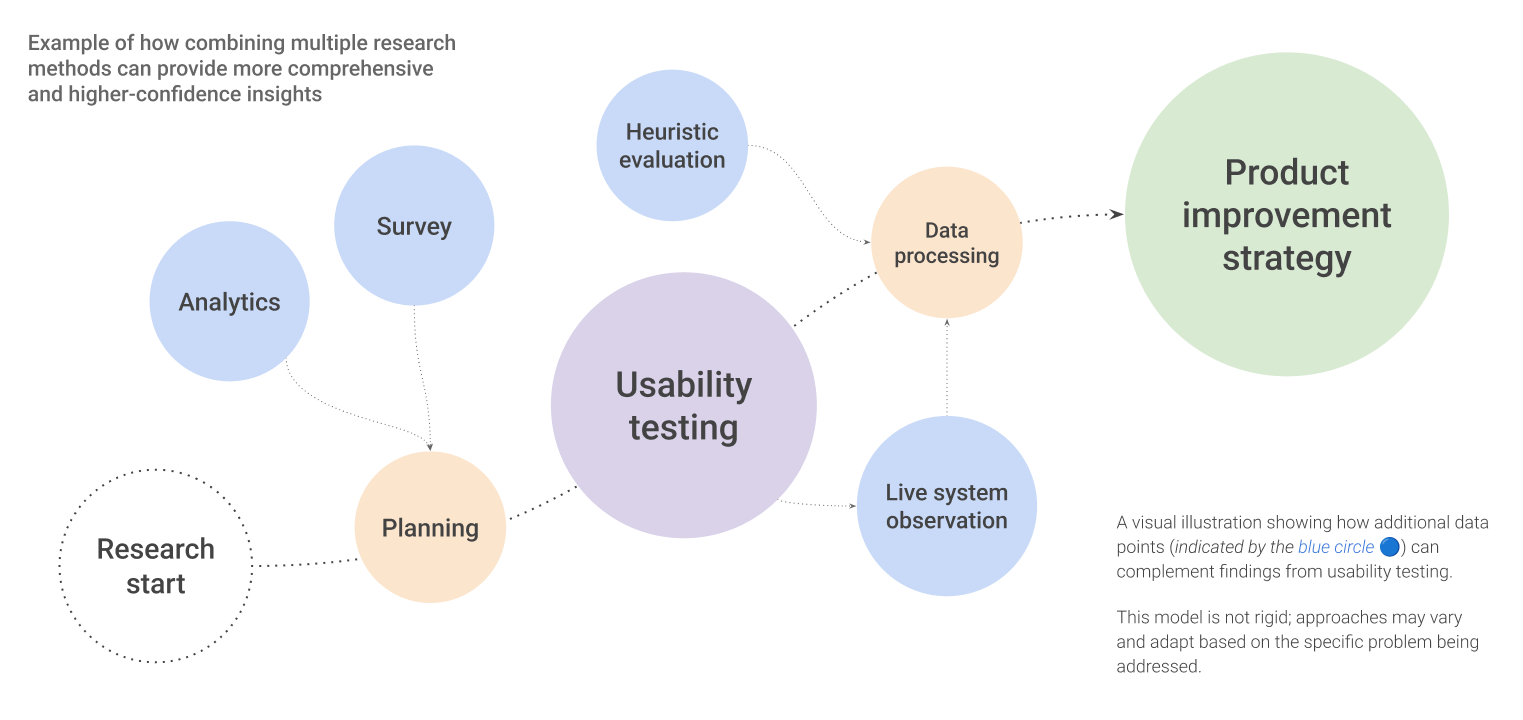

Our approach combines quantitative and qualitative methods, because we’ve learned that neither tells the complete story alone. When we spot a pattern in the data, we investigate the “why” through careful observation. When we observe interesting behaviour, we validate its significance through data. This dual-lens approach helps us move from correlation towards a well-supported explanation of cause.

Making it work: our testing methodology

Live product testing isn’t just about watching users click through interfaces. It’s a carefully orchestrated process that begins long before we meet any users and continues well beyond the testing sessions themselves. We have refined our methodology through years of testing products across different scales and industries, from startup platforms to enterprise systems.

Here’s what we do and why it works.

Setting the foundation well

We begin by understanding the product context deeply. That means holding focused conversations with stakeholders to understand business goals and constraints, reviewing analytics to spot patterns worth investigating, and designing research that fits your specific situation. We’re particularly careful about selecting participants who can provide relevant insights – people who use your product in meaningful ways as part of their daily work.

As UX designers, we aim to strike the right balance between rigour and flexibility. While we follow a structured approach, we stay adaptable to the product team’s needs and constraints. This thoughtful preparation helps us maximise the value of every testing session while minimising disruption to your operations.

Testing approaches

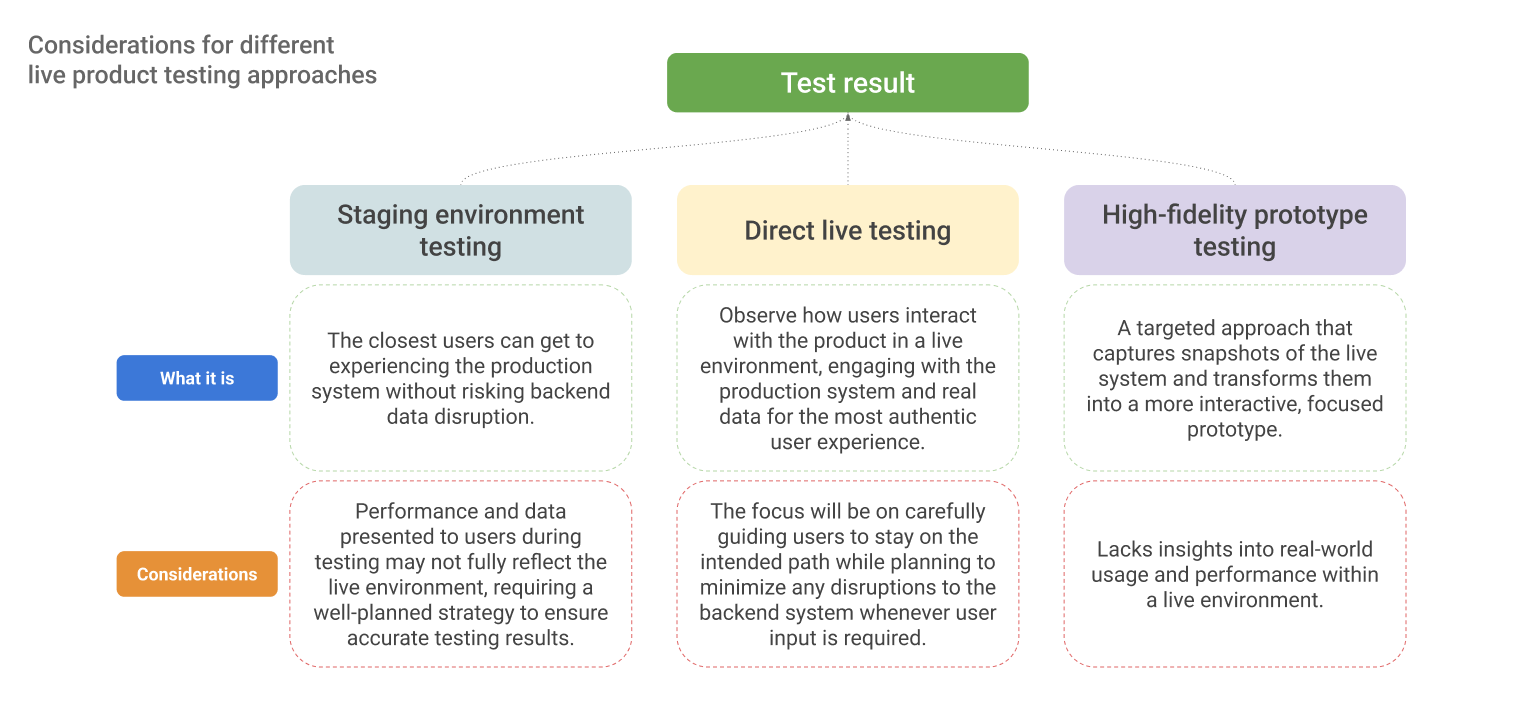

1. Direct Live Testing

We observe users interacting with your actual live product in their natural work environment. This means watching real tasks being completed with real data and real stakes. It’s like shadowing a user through their workday, observing not just how they use your product, but how they integrate it into their broader workflow. While this approach requires careful planning to avoid disrupting production systems, it often reveals the most valuable insights about how your product is actually being used in the wild.

2. Staging Environment Testing

Think of this as a dress rehearsal with your product. We create a testing environment that mirrors your production system, complete with realistic data and workflows, but in a controlled space where we can safely observe user behaviour. This approach is particularly valuable for financial systems, healthcare platforms, or any product where testing in production isn’t feasible. The key is making the staging environment feel real enough that users interact with it naturally.

3. High-Fidelity Prototype Testing

Sometimes we need to recreate specific parts of your live product as an interactive prototype. This might be because we want to test a particular user journey in isolation, or because we need to simulate a specific state of your product that’s hard to replicate in the live environment. By carefully rebuilding these scenarios, we can focus user attention exactly where we need it and iterate quickly on potential solutions.

Multi-method integration

Sometimes the most powerful insights come from combining methods:

- Using analytics to identify patterns, then investigating through live testing

- Combining prototype testing with live system observation

- Validating qualitative insights with quantitative data

- Cross-referencing findings across different user groups and contexts

When to consider live testing

Every product team faces moments when they need deeper insights than analytics alone can provide. Perhaps your metrics look good but user feedback suggests otherwise. Or maybe you’re planning a major evolution of your product and need to ensure you’re moving in the right direction.

Live product testing delivers particular value when:

- User adoption isn’t meeting expectations despite good technical performance

- Users are creating workarounds to your carefully designed processes

- You’re seeing high task completion rates but low return usage

- Feature usage patterns don’t align with your product strategy

- You’re planning significant product evolution and need ground truth

Often, teams discover that live testing would have helped them avoid months of misdirected development effort. The insights gained typically go far beyond the initial scope of investigation, revealing opportunities for improvement that weren’t visible through other methods.
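
As a rough illustration of the third signal above (high task completion but low return usage), here is a minimal Python sketch of how a team might scan its own analytics for features worth a closer look. Everything in it is an assumption made for illustration: the `FeatureStats` fields, the sample figures and the 80% / 30% thresholds are hypothetical, not part of our methodology or of any analytics tool. A flag here is only a prompt to go and observe real users, not a finding in itself.

```python
from dataclasses import dataclass


@dataclass
class FeatureStats:
    """Aggregated analytics for one feature (all field names are hypothetical)."""
    name: str
    tasks_started: int
    tasks_completed: int
    active_users: int     # users who used the feature in a first period
    returning_users: int  # of those, users who came back in the next period


def completion_rate(s: FeatureStats) -> float:
    # Share of started tasks that were finished; 0.0 if nothing was started.
    return s.tasks_completed / s.tasks_started if s.tasks_started else 0.0


def return_rate(s: FeatureStats) -> float:
    # Share of first-period users who came back; 0.0 if there were no users.
    return s.returning_users / s.active_users if s.active_users else 0.0


def flag_for_live_testing(stats, min_completion=0.8, max_return=0.3):
    """Return names of features where users finish tasks but rarely return.

    The thresholds are illustrative defaults and should be tuned per product.
    """
    return [
        s.name
        for s in stats
        if completion_rate(s) >= min_completion and return_rate(s) <= max_return
    ]


# Made-up sample data, purely to show the shape of the check.
sample = [
    FeatureStats("reports", 200, 180, 100, 20),  # completes well, few return
    FeatureStats("export", 100, 95, 50, 40),     # completes well, most return
    FeatureStats("alerts", 100, 40, 50, 10),     # low completion: a different problem
]

flagged = flag_for_live_testing(sample)
print(flagged)
```

A check like this only tells you where to look. Why users complete a task and then stay away is exactly the kind of question that observation in a live setting is meant to answer.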

Ready to bridge the gap?

What looks like a simple usability issue at first could signal a deeper opportunity to make your product truly work for your users.

This is what fascinates me about live product testing: the way small, everyday user behaviours can point us toward significant opportunities. Time and again, I’ve seen how these insights help teams avoid months of building solutions for the wrong problems.

Your product likely has similar stories waiting to be uncovered. While you focus on keeping your product running and delivering new features, we can help you spot these patterns and understand what they’re telling you about your users’ real needs.

—

If you’re wondering about the hidden potential in your digital product, we’d love to hear your story. Reach out to [email protected]

This post is part of our ongoing series about evidence-based product evolution. Follow us for more insights about building products that stand the test of time.